NeARportation

NeARportation: A Remote Real-time Neural Rendering Framework

(ACM VRST 2022)

Project Page

https://www.ar.c.titech.ac.jp/projects/nearportation-2022

Abstract

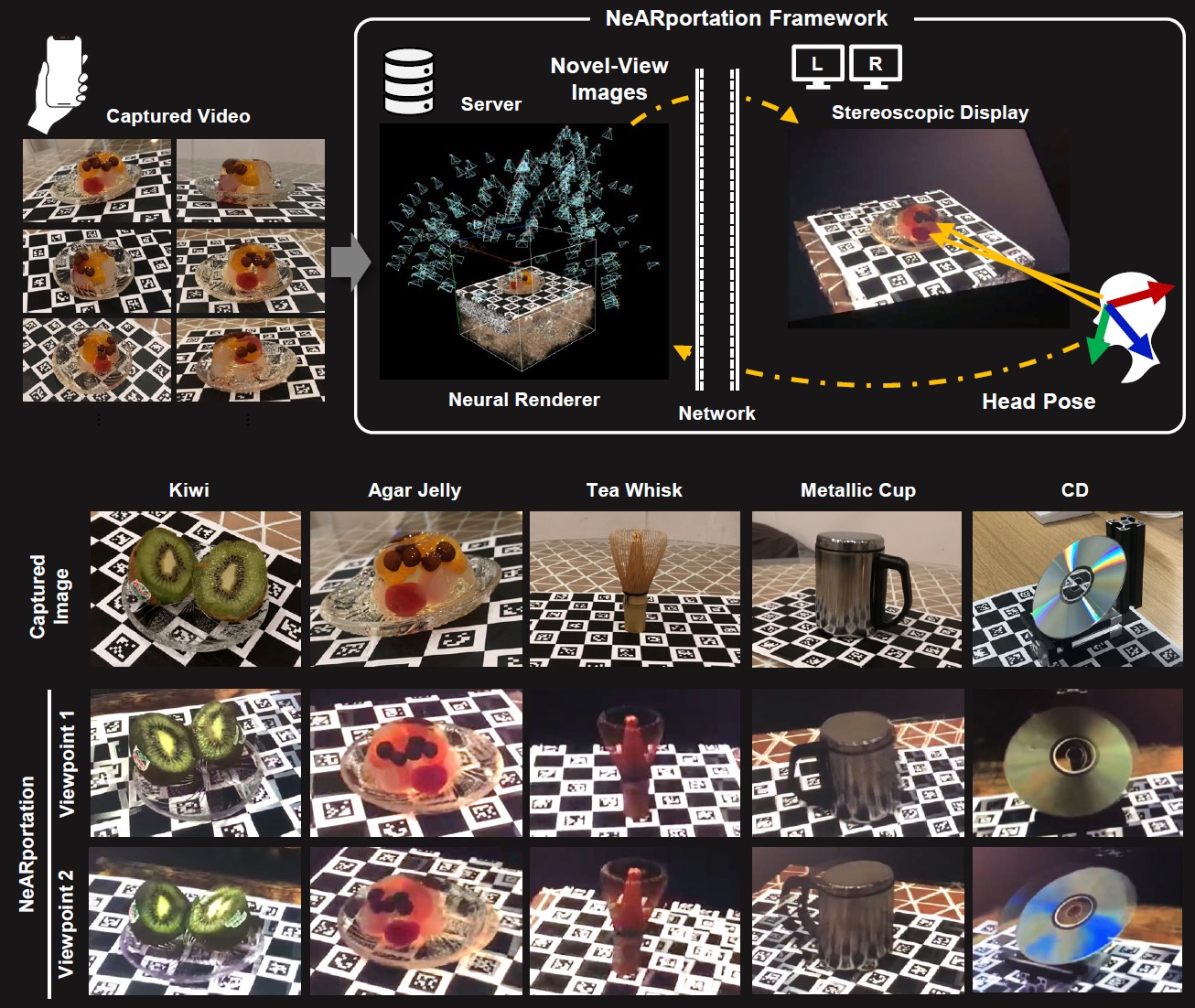

While presenting a photo-realistic appearance plays a major role in immersion in an augmented virtuality environment, displaying the photo-realistic appearance of real objects remains a challenging problem. Recent developments in photogrammetry have made it easier to incorporate real objects into virtual space. However, photo-realistic photogrammetry requires a dedicated measurement environment, and there is a trade-off between measurement cost and quality. Furthermore, even with photo-realistic appearance measurements, there is a trade-off between rendering quality and framerate. No existing framework resolves these trade-offs and easily provides a photo-realistic appearance in real time. Our NeARportation framework combines server-client bidirectional communication and neural rendering to resolve these trade-offs. Neural rendering on the server receives the client's head posture and generates a novel-view image with realistic appearance reproduction, which is streamed to the client's display. By applying our framework to a stereoscopic display, we confirmed that it could display a high-fidelity appearance on full-HD stereo videos at 35-40 frames per second (fps), following the user's head motion.
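The bidirectional loop described in the abstract (the client sends a head pose, the server renders a novel view, and the frames are streamed back) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the message layout, the `HeadPose` fields, the 0.064 m eye separation, and the names `encode_pose`, `handle_request`, and `decode_frames` are all hypothetical, and `render_stereo_stub` is a trivial stand-in for the neural renderer. The exchange runs in-process rather than over a network.

```python
import json
import struct
from dataclasses import dataclass, asdict


@dataclass
class HeadPose:
    # Hypothetical pose message: position in metres, orientation as a quaternion.
    position: tuple     # (x, y, z)
    orientation: tuple  # (x, y, z, w)


def encode_pose(pose: HeadPose) -> bytes:
    """Client -> server: serialize the pose as length-prefixed JSON."""
    payload = json.dumps(asdict(pose)).encode("utf-8")
    return struct.pack("!I", len(payload)) + payload


def decode_pose(message: bytes) -> HeadPose:
    """Server side: parse the length-prefixed JSON pose message."""
    (length,) = struct.unpack("!I", message[:4])
    fields = json.loads(message[4:4 + length].decode("utf-8"))
    return HeadPose(tuple(fields["position"]), tuple(fields["orientation"]))


def render_stereo_stub(pose: HeadPose, width: int, height: int, ipd: float = 0.064):
    """Stand-in for the neural renderer (assumption: NOT a real renderer).

    Fills each eye's frame with a single grey level derived from that eye's
    horizontal position, so the two views differ as the head moves.
    """
    frames = []
    for offset in (-ipd / 2.0, ipd / 2.0):  # left eye, then right eye
        eye_x = pose.position[0] + offset
        level = round(eye_x * 1000) % 256
        frames.append(bytes([level]) * (width * height))
    return frames[0], frames[1]


def handle_request(message: bytes, width: int = 8, height: int = 4) -> bytes:
    """Server: pose in, stereo frame pair out (size header + left + right)."""
    pose = decode_pose(message)
    left, right = render_stereo_stub(pose, width, height)
    return struct.pack("!HH", width, height) + left + right


def decode_frames(reply: bytes):
    """Client: split the reply into the left and right eye buffers."""
    width, height = struct.unpack("!HH", reply[:4])
    size = width * height
    return reply[4:4 + size], reply[4 + size:4 + 2 * size]


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, covering pose upload, rendering, and streaming."""
    return 1000.0 / fps


if __name__ == "__main__":
    pose = HeadPose((0.0, 1.6, 0.5), (0.0, 0.0, 0.0, 1.0))
    left, right = decode_frames(handle_request(encode_pose(pose)))
    print(len(left), len(right), left != right)
    print(frame_budget_ms(40), frame_budget_ms(35))
```

At the reported 35-40 fps, the whole round trip has to fit in roughly 25 to 29 ms per stereo frame, which is why a real system would presumably stream compressed video rather than raw buffers as this sketch does.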

Publications

- Yuichi Hiroi, Yuta Itoh, Jun Rekimoto, “NeARportation: A Remote Real-time Neural Rendering Framework”, In Proceedings of the ACM Symposium on Virtual Reality Software and Technology 2022 (VRST 2022), 23:1-23:5, Tsukuba, Japan, Nov. 29-Dec. 1, 2022.