Neural Distortion Fields

Neural Distortion Fields for Spatial Calibration of Wide Field-of-View Near-Eye Displays

(OSA Optics Express 2022)

Project Page

https://www.ar.c.titech.ac.jp/projects/neural-distortion-fields-2022

Abstract

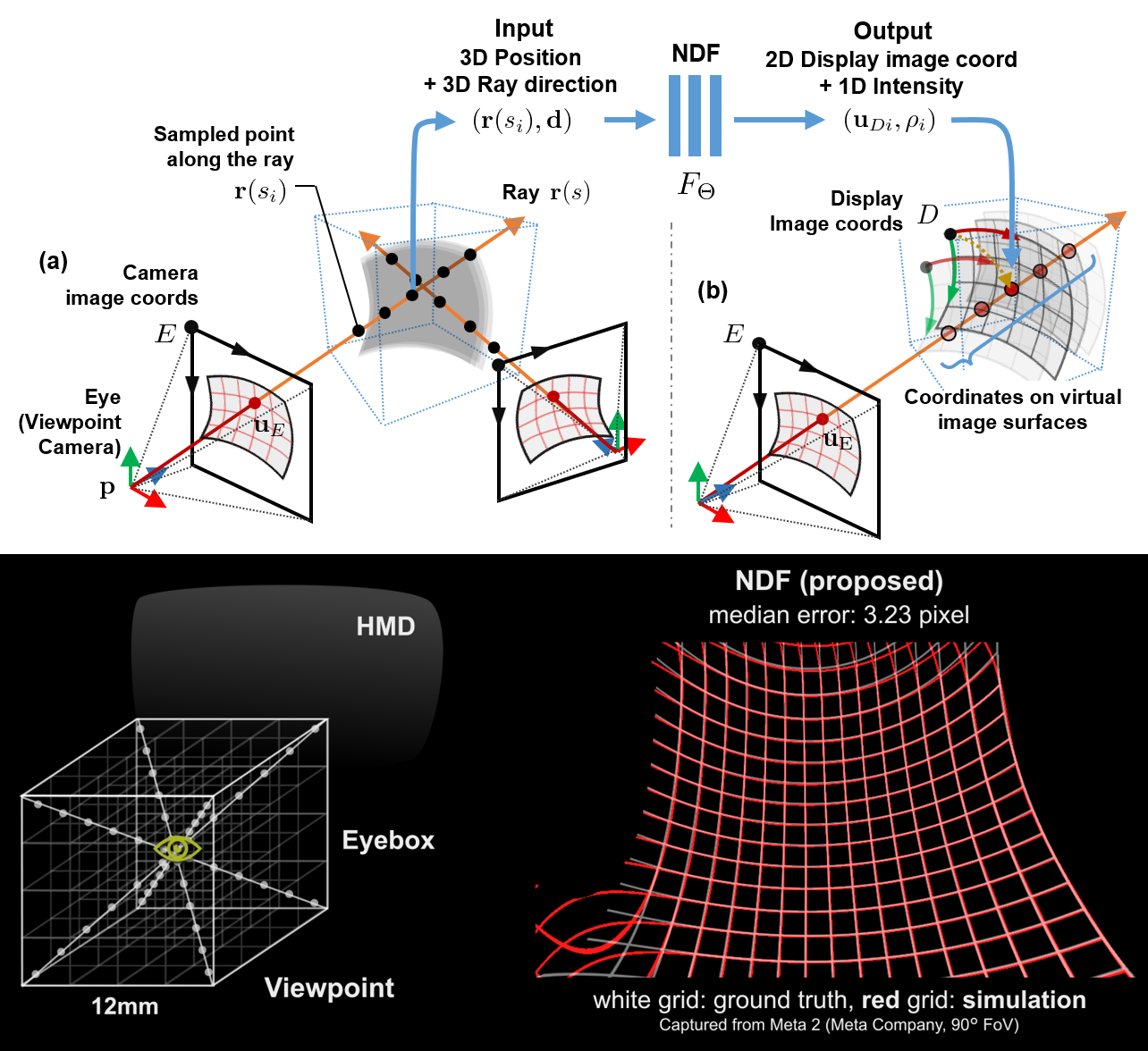

We propose a spatial calibration method for wide Field-of-View (FoV) Near-Eye Displays (NEDs) with complex image distortions. Image distortions in NEDs can break the realism of virtual objects and cause sickness. To achieve distortion-free images in NEDs, it is necessary to establish a pixel-by-pixel correspondence between the viewpoint and the displayed image. Designing compact, wide-FoV NEDs requires complex optical designs. In such designs, the displayed images are subject to gaze-contingent, non-linear geometric distortions, which can be difficult for explicit geometric models to represent or computationally intensive to optimize. To solve these problems, we propose the Neural Distortion Field (NDF), a fully-connected deep neural network that implicitly represents display surfaces that are complexly distorted in space. NDF takes a spatial position and a gaze direction as input and outputs the display pixel coordinate and its intensity as perceived from the input gaze direction. We synthesize the distortion map for a novel viewpoint by querying points along the ray from the viewpoint and computing a weighted sum that projects the output display coordinates into an image. Experiments showed that NDF calibrates an augmented reality NED with a 90$^{\circ}$ FoV to a median error of about 3.23 pixels (5.8 arcmin) using only 8 training viewpoints. Additionally, we confirmed that NDF calibrates more accurately than non-linear polynomial fitting, especially around the center of the FoV.
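The ray-query step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: `toy_field` is a hypothetical analytic stand-in for a trained NDF, the intensity-normalized weighting is an assumed form of the "weighted sum", and the sampling range, sample count, and grid layout are illustrative choices.

```python
import math


def toy_field(position, direction):
    """Hypothetical stand-in for a trained NDF (not the paper's network).

    Maps a 3D position and gaze direction to (u, v, intensity).
    The gaze direction is unused here; a real NDF would condition on it.
    """
    x, y, z = position
    # Assumption: the virtual display surface sits near z = 1.0,
    # so the perceived intensity peaks there.
    intensity = math.exp(-((z - 1.0) ** 2) / 0.02)
    # Assumption: pixel coordinates vary linearly with lateral position.
    u = 960.0 + 800.0 * x
    v = 540.0 + 800.0 * y
    return u, v, intensity


def normalize(vec):
    norm = math.sqrt(sum(c * c for c in vec))
    return tuple(c / norm for c in vec)


def query_display_coordinate(field, viewpoint, direction,
                             near=0.1, far=3.0, n_samples=128):
    """Sample the field along one ray and return the intensity-weighted
    mean display coordinate, or None if every sample has zero intensity."""
    direction = normalize(direction)
    sum_u = sum_v = sum_w = 0.0
    for i in range(n_samples):
        # Midpoint of the i-th segment between near and far.
        t = near + (far - near) * (i + 0.5) / n_samples
        point = tuple(o + t * d for o, d in zip(viewpoint, direction))
        u, v, intensity = field(point, direction)
        sum_u += intensity * u
        sum_v += intensity * v
        sum_w += intensity
    if sum_w == 0.0:
        return None
    return sum_u / sum_w, sum_v / sum_w


def synthesize_distortion_map(field, viewpoint, fov_deg=90.0, grid=3):
    """Build a grid x grid map of display coordinates for one viewpoint.

    Each cell corresponds to a gaze direction at the cell center
    of an angular grid spanning fov_deg in both axes.
    """
    half = math.radians(fov_deg) / 2.0
    rows = []
    for j in range(grid):
        angle_y = -half + 2.0 * half * (j + 0.5) / grid
        row = []
        for i in range(grid):
            angle_x = -half + 2.0 * half * (i + 0.5) / grid
            direction = (math.tan(angle_x), math.tan(angle_y), 1.0)
            row.append(query_display_coordinate(field, viewpoint, direction))
        rows.append(row)
    return rows


if __name__ == "__main__":
    distortion_map = synthesize_distortion_map(toy_field, (0.0, 0.0, 0.0))
    for row in distortion_map:
        print(row)
```

In the actual method the stand-in would be replaced by the trained network, and the resulting map of display coordinates per gaze direction is what a renderer would use to pre-warp images for the novel viewpoint.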

Publications

- Yuichi Hiroi, Kiyosato Someya, Yuta Itoh, “Neural Distortion Fields for Spatial Calibration of Wide Field-of-View Near-Eye Displays”, OPTICA (OSA) Optics Express, Vol. 30, Issue 22, pp. 40628–40644, 2022.